In my recent series of posts I covered how to implement a better integration between Dynamics 365 and SharePoint. One of the common scenarios you will encounter once you’ve integrated the systems is the requirement to move Email Attachments from Dynamics 365 to SharePoint. In my implementation many of the sites have more than one Document Library, and Users want to select a specific library for each email’s attachments, rather than having them all go to one standard location and then having to move them after they’ve been uploaded to SharePoint.

The Solution

The solution I developed to enable this was to use Teams Adaptive Cards. I have created a Flow that is triggered when a User tracks an email against an Account; the Flow sends the User an Adaptive Card in Teams which has a list of the available Document Libraries on the SharePoint site related to the Account, and asks the User to select which one they want to upload the files to. After a Document Library is selected the Flow then moves the attachments to SharePoint. I’ll run through the details below:

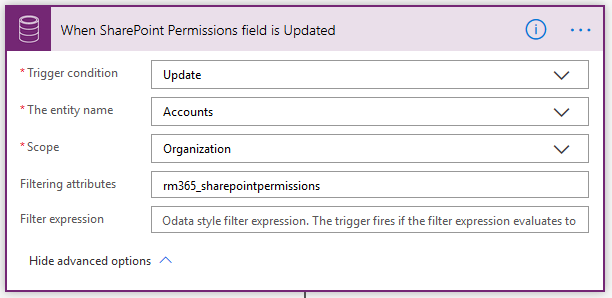

1. When a Tracked Email is Created – the first step is to define the trigger. For this I’m using the Common Data Service (Current Environment) connector and the “When a Record is Created, Updated or Deleted” trigger. I used the Filter Expression below to ensure the Flow is only triggered for emails that have attachments and that are Set Regarding a record:

```
_regardingobjectid_value ne null and attachmentcount gt 0
```
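The filter is evaluated by the trigger itself, but to make its two conditions explicit, here’s a minimal Python sketch that applies the same logic to an email record represented as a dictionary. The dictionary shape (keys named after the Dataverse columns) and the `should_trigger` helper are assumptions for illustration only.

```python
from typing import Any, Mapping


def should_trigger(email: Mapping[str, Any]) -> bool:
    """Mirror of `_regardingobjectid_value ne null and attachmentcount gt 0`."""
    # "ne null": the email must be Set Regarding some record
    has_regarding = email.get("_regardingobjectid_value") is not None
    # "gt 0": the email must carry at least one attachment (missing count treated as 0)
    has_attachments = (email.get("attachmentcount") or 0) > 0
    return has_regarding and has_attachments


tracked = {"_regardingobjectid_value": "6127202a-b958-ea11-a811-000d3a86ba0b", "attachmentcount": 2}
untracked = {"_regardingobjectid_value": None, "attachmentcount": 2}
print(should_trigger(tracked), should_trigger(untracked))  # True False
```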

2. Get User who Created Email – next I use the Get Record action to retrieve the User record for the User who tracked the email into the system. One thing to note here is that I’m using the value in the “Owner” field rather than the “Created by” field. This is because a tracked email is initially created by the SYSTEM account, and ownership is then assigned to the User who tracked it. We get the User details so that we can retrieve their email address for use later in the Flow.

3. Get Regarding Account – we use another Get Record action to retrieve the details of the Account against whom the email is tracked. Note that in your environment if you have multiple entities enabled for email integration then you may wish to implement a Switch action here so you can retrieve the details of any record that you might be tracking emails against; for the purposes of this demo I’ve kept it simple. We need to retrieve the Account record so we can use some of the values in our adaptive card later in the Flow.
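If you do add a Switch, each case is effectively a lookup from the regarding record’s entity type to the table you retrieve it from. The Python sketch below illustrates that routing; the `ENTITY_SETS` mapping and the `regarding_record_path` helper are hypothetical, and it assumes you can read the regarding record’s entity logical name from the trigger output.

```python
# Hypothetical mapping from entity logical name to Web API entity set name;
# extend it with whichever entities you have enabled for email tracking.
ENTITY_SETS = {
    "account": "accounts",
    "contact": "contacts",
    "opportunity": "opportunities",
}


def regarding_record_path(entity_type: str, record_id: str) -> str:
    """Return the relative path of the regarding record, one 'case' per entity."""
    try:
        entity_set = ENTITY_SETS[entity_type]
    except KeyError:
        # Plays the role of the Switch's Default branch: fail loudly
        raise ValueError(f"Email tracking against '{entity_type}' is not handled") from None
    return f"{entity_set}({record_id})"
```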

4. List Document Locations related to Account – next we want to retrieve all of the related Document Locations for the Account as each Document Location represents a SharePoint Document Library, so we’ll use a List Records action with the following filter query:

```
_regardingobjectid_value eq @{triggerOutputs()?['body/_regardingobjectid_value']}
```

We’ll loop through these Document Locations to create the ChoiceSet for our adaptive card in the next steps.
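The filter query is just the regarding Account’s GUID dropped into an OData `eq` comparison. As a minimal sketch (the `document_location_filter` helper is hypothetical), building it in Python with a GUID check up front makes a malformed ID fail before any query is sent:

```python
import uuid


def document_location_filter(account_id: str) -> str:
    """Build the List Records filter for Document Locations regarding one Account."""
    # uuid.UUID raises ValueError on a malformed GUID; str() normalises to lowercase
    guid = str(uuid.UUID(account_id))
    return f"_regardingobjectid_value eq {guid}"
```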

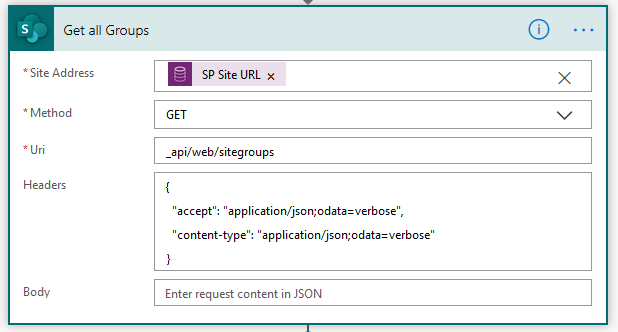

5. Initialize Choices Array variable – we need to use an “Initialize Variable” action to create an empty array that we can use when we loop through the Document Locations we returned above.

6. Apply to Each Document Location – we’ll use an Apply to Each control to loop through each Document Location we returned in Step 4.

6A. Append to Choices Array – for each Document Location we’re going to use an Append to Array Variable action to create a new element in the Choices Array we created above. This array will be used in the Adaptive Card we create later in the Flow. The array value we use is:

```json
{
  "value": "@{items('Apply_to_each_Document_Location')?['sharepointdocumentlocationid']}/@{items('Apply_to_each_Document_Location')?['name']}",
  "title": "@{items('Apply_to_each_Document_Location')?['name']}"
}
```

One of the things you may notice is that I’ve constructed the “Value” in the array element as [GUID_OF_DOCUMENT_LOCATION]/[DOCUMENT_LOCATION_NAME]. The reason for this is that the choice a User makes returns that value to the Flow, and I need both the GUID and the Name later in the Flow, so I concatenate them here and split them again once they’re returned.

A sample of the output from this step is below:

```json
{
  "value": "6127202a-b958-ea11-a811-000d3a86ba0b/Communication Info",
  "title": "Communication Info"
}
```
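Steps 5 to 6A amount to a simple map over the Document Locations. Here’s a hedged Python sketch of the same loop, assuming each Document Location is a dictionary carrying the `sharepointdocumentlocationid` and `name` columns used in the Flow expressions; `build_choices` is a hypothetical helper.

```python
from typing import Dict, Iterable, List, Mapping


def build_choices(locations: Iterable[Mapping[str, str]]) -> List[Dict[str, str]]:
    """One ChoiceSet entry per Document Location, like Append to Array Variable."""
    choices = []
    for loc in locations:
        choices.append({
            # "GUID/Name" so both parts can be split back out after the User responds
            "value": f"{loc['sharepointdocumentlocationid']}/{loc['name']}",
            # The text the User sees in the dropdown
            "title": loc["name"],
        })
    return choices
```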

7. Ask User which Document Library for Uploads – this is the key action in the Flow. We’re using the “Post an Adaptive Card to a Teams user and wait for a response” action. Adaptive cards are defined in JSON, and fortunately there are some great samples available at https://adaptivecards.io/samples/. I also found the “Ultimate Guide” written by Tomasz Poszytek to be incredibly helpful.

The card I designed looks like this:

The JSON I used to create my card is:

```json
{
  "type": "AdaptiveCard",
  "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
  "version": "1.0",
  "body": [
    {
      "type": "TextBlock",
      "size": "Medium",
      "weight": "Bolder",
      "text": "Upload Attachments to SharePoint"
    },
    {
      "type": "ColumnSet",
      "columns": [
        {
          "type": "Column",
          "items": [
            {
              "type": "TextBlock",
              "weight": "Bolder",
              "text": "@{triggerOutputs()?['body/subject']}",
              "wrap": true
            },
            {
              "type": "TextBlock",
              "spacing": "None",
              "text": "Created @{formatDateTime(utcnow(), 'dd MMMM yyyy HH:mm')}",
              "isSubtle": true,
              "wrap": true
            }
          ],
          "width": "stretch"
        }
      ]
    },
    {
      "type": "TextBlock",
      "text": "You tracked an email against @{outputs('Get_regarding_Account')?['body/name']}. Please select a Document Library from the List below to upload the attached documents to",
      "wrap": true
    },
    {
      "type": "FactSet",
      "facts": [
        {
          "title": "Account:",
          "value": "@{outputs('Get_regarding_Account')?['body/name']}"
        },
        {
          "title": "SharePoint Site:",
          "value": "@{outputs('Get_regarding_Account')?['body/rm365_spsiteurl']}"
        },
        {
          "title": "Subject:",
          "value": "@{triggerOutputs()?['body/subject']}"
        },
        {
          "title": "Sent To:",
          "value": "@{triggerOutputs()?['body/torecipients']}"
        },
        {
          "title": "Sent From:",
          "value": "@{triggerOutputs()?['body/sender']}"
        }
      ]
    },
    {
      "type": "Input.ChoiceSet",
      "id": "CompactSelectVal",
      "value": "1",
      "choices": @{variables('choices')}
    }
  ],
  "actions": [
    {
      "type": "Action.Submit",
      "title": "Submit"
    }
  ]
}
```

As you can see, the “choices” property of the Input.ChoiceSet element at the end of the card body is set to the Choices variable, which populates the dropdown for the User.
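Because `@{variables('choices')}` is not wrapped in quotes, the array’s JSON text is written straight into the card, so `choices` ends up as a real array of objects rather than a string. The Python sketch below builds a trimmed-down version of the card the same way; the ColumnSet and FactSet are omitted for brevity, and `build_card` is a hypothetical helper.

```python
import json
from typing import Dict, List


def build_card(choices: List[Dict[str, str]]) -> str:
    """Serialise a cut-down version of the card with the choices embedded as an array."""
    card = {
        "type": "AdaptiveCard",
        "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
        "version": "1.0",
        "body": [
            {"type": "TextBlock", "size": "Medium", "weight": "Bolder",
             "text": "Upload Attachments to SharePoint"},
            # The list is inserted as a JSON array, not as a quoted string
            {"type": "Input.ChoiceSet", "id": "CompactSelectVal", "choices": choices},
        ],
        "actions": [{"type": "Action.Submit", "title": "Submit"}],
    }
    return json.dumps(card, indent=2)
```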

I also use the User’s Primary Email address that I got in Step 2 to specify who I will be sending the adaptive card to:

The output from the Adaptive card is JSON that looks like:

```json
{
  "responseTime": "2020-04-02T15:38:10.0668583Z",
  "responder": {
    "objectId": "[GUID]",
    "tenantId": "[GUID]",
    "email": "user@emailaddress.com",
    "userPrincipalName": "user@emailaddress.com",
    "displayName": "Ryan Maclean"
  },
  "submitActionId": "Submit",
  "data": {
    "CompactSelectVal": "9a819bbc-4d56-ea11-a811-000d3a86ba0b/Contracts"
  }
}
```

We’ll be using the information returned in the Data section in the next two steps.

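8. Compose DocLib Name – for this step we’ll use a Compose action with a substring expression to extract the Document Library name that we concatenated with the GUID above. The GUID is a 36-character string, so we set the start index of our substring to 36. Because the index is zero-based, position 36 is the “/” separator, so the output keeps a leading slash (for example “/Contracts”), which is the form we use as the Folder Path in Step 14A. The expression we use is: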

```
substring(outputs('Ask_User_which_Document_Library_for_Uploads')?['body']?['data']?['CompactSelectVal'],36)
```

9. Compose DocLib GUID – in this step we do the same as in the step above, but this time we set the start index of our substring to 0 and the length to 36:

```
substring(outputs('Ask_User_which_Document_Library_for_Uploads')?['body']?['data']?['CompactSelectVal'],0,36)
```
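Steps 8 and 9 can be sketched in Python with string slicing, which follows the same zero-based indexing as the `substring` expressions. This is a minimal sketch assuming the response shape shown above; `split_selection` is a hypothetical helper.

```python
import json
from typing import Tuple

GUID_LENGTH = 36  # e.g. "9a819bbc-4d56-ea11-a811-000d3a86ba0b"


def split_selection(response_json: str) -> Tuple[str, str]:
    """Return (Document Location GUID, folder path) from the card response."""
    selected = json.loads(response_json)["data"]["CompactSelectVal"]
    # Equivalent of substring(value, 0, 36)
    guid = selected[:GUID_LENGTH]
    # Equivalent of substring(value, 36): index 36 is the "/" separator, so it is kept
    folder_path = selected[GUID_LENGTH:]
    return guid, folder_path


sample = '{"data": {"CompactSelectVal": "9a819bbc-4d56-ea11-a811-000d3a86ba0b/Contracts"}}'
print(split_selection(sample))
# ('9a819bbc-4d56-ea11-a811-000d3a86ba0b', '/Contracts')
```

If you’d rather have the bare library name without the leading slash, start the slice (or the `substring`) at index 37 instead.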

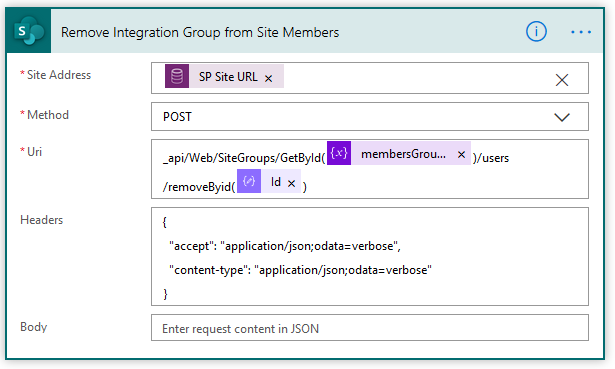

10. Get AbsoluteURL for SharePoint Document Library – in this step we’re going to be using the HTTP with Azure AD connector and the Invoke an HTTP request action to query the Web API with the RetrieveAbsoluteAndSiteCollectionUrl Function so we can return the Site Collection URL for our SharePoint site and get the absolute URL for the specific Document Location the User selected. All credit for this goes to Thomas Peschat. His blog covers the full detail of this step, so I’d recommend reading it for more information.

In the Url of the request I’m using the GUID that I retrieved as an output from Step 9 above to specify the document library I want the URLs from.

11. Compose AbsoluteURL – in this step we’re using a Compose action to extract the AbsoluteURL of the Document Library from the output of the step above.

12. Compose SiteCollectionUrl – as above, we’re using a Compose action to extract the SiteCollectionUrl from the output of Step 10.
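As a hedged sketch of Steps 10 to 12: the request calls the function bound to the Document Location the User picked, and the two Compose actions read the `AbsoluteUrl` and `SiteCollectionUrl` properties from the response. The `v9.1` API version in the path is an assumption (use whichever version your environment exposes), and both helper names are hypothetical; check Thomas Peschat’s post and the Web API reference for the exact request.

```python
from typing import Any, Mapping, Tuple


def absolute_url_request_path(location_id: str) -> str:
    """Relative URL for the bound function on the selected Document Location (API version assumed)."""
    return (
        f"/api/data/v9.1/sharepointdocumentlocations({location_id})"
        "/Microsoft.Dynamics.CRM.RetrieveAbsoluteAndSiteCollectionUrl()"
    )


def extract_urls(response: Mapping[str, Any]) -> Tuple[str, str]:
    """Steps 11 and 12: pull the two URL properties out of the response body."""
    return response["AbsoluteUrl"], response["SiteCollectionUrl"]
```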

13. List Email Attachments – in order to get the Attachment details from D365 we need to do a List Records action on the activitymimeattachment entity. This won’t appear in the dropdown list so you’ll need to enter it as a custom value. Our filter query will be:

```
_objectid_value eq @{triggerOutputs()?['body/activityid']}
```
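For reference, the same attachment query expressed as a Web API query string might look like the Python sketch below. The `activitymimeattachments` entity set name and the `filename`, `body` and `mimetype` columns are assumptions based on the activitymimeattachment entity, and `attachments_query` is a hypothetical helper.

```python
from urllib.parse import quote, urlencode


def attachments_query(activity_id: str) -> str:
    """Build a query for the attachments belonging to one email activity."""
    params = {
        "$filter": f"_objectid_value eq {activity_id}",
        # Only the columns the upload loop needs (assumed column names)
        "$select": "filename,body,mimetype",
    }
    # Keep "$" and "," readable; spaces are percent-encoded as %20
    return "activitymimeattachments?" + urlencode(params, safe="$,", quote_via=quote)
```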

14. Apply to each Attachment – we’ll use an Apply to Each control to loop through each Attachment we returned in the previous step.

14A. Create file in selected SharePoint Document Library – for each Attachment from our D365 email we’ll create a file in the SharePoint Document Library using a Create File action. The key elements are:

- Site Address – we use the SiteCollectionUrl output from the compose step 12 above

- Folder Path – we use the DocLibName output from the compose step 8 above

- File Name – we use the File Name from the attachment

- File Content – the file content needs to be in binary format, but in D365 it is stored in base64 format, so we need to use a base64ToBinary expression
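To illustrate what Step 14A does with each attachment, here’s a minimal Python sketch that decodes the base64 content (the equivalent of `base64ToBinary`) and writes it to a local folder as a stand-in for the SharePoint Create File action. The local directory, the `save_attachment` helper and its parameters are hypothetical.

```python
import base64
from pathlib import Path


def save_attachment(root: Path, folder_path: str, file_name: str, body_b64: str) -> Path:
    """Local stand-in for Create File: decode base64 and write the bytes."""
    # folder_path is the "/Contracts"-style output of Step 8; drop the slash to join safely
    target_dir = root / folder_path.lstrip("/")
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / file_name
    # Same conversion as base64ToBinary(): base64 text in, raw bytes out
    target.write_bytes(base64.b64decode(body_b64))
    return target
```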

You may also wish to add a final step to your flow to delete the attachments from Dynamics 365 after they’ve been uploaded to SharePoint.

Conclusion

This is my first attempt at using Teams Adaptive Cards and I’m really impressed with the flexibility they offer. At the moment the Flow waits up to 30 days for a response from the User; I’d like to be able to change that, and also to offer a default resolution if no option is selected.

I think this approach offers a good degree of flexibility to Users to enable the migration of email attachments from D365 to SharePoint, and I believe the use of Adaptive Cards will help with adoption. I’m also experimenting with Outlook actionable messages to offer similar functionality.

I’d love to hear your feedback on whether you think this approach could work for you, so please feel free to reach out to me on social media or drop a comment below!